In the early days of AI, researchers relied on general-purpose CPUs, but these weren’t powerful enough to handle the massive amount of data needed for deep learning. That’s where AI chips stepped in. These specialized processors, often based on graphics processing units (GPUs), were specifically designed to accelerate the calculations crucial for artificial intelligence.

So, who are the masterminds behind these powerful chips? In this post, we are going to highlight the top AI chip companies pushing the boundaries of innovation and shaping the future of intelligent machines.

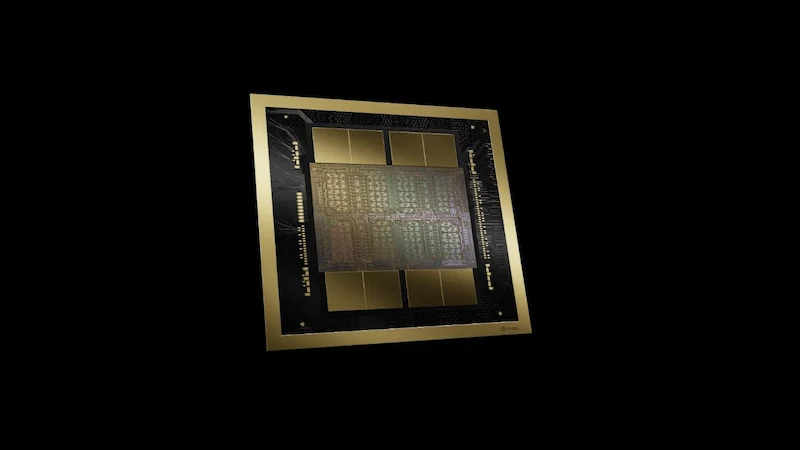

NVIDIA’s Blackwell GPU: two dies combined in a single package with HBM3e memory.

NVIDIA

This tech giant’s market cap was around $323 billion in 2020, and it is currently the third U.S. company with a market cap of over $2 trillion! That growth alone shows how sharply demand for faster, higher-performance chips has risen.

From Humble Beginnings to Deep Learning Domination:

NVIDIA wasn’t always the AI chip kingpin. It started out as a graphics card wizard, but its powerful GPUs turned out to be a perfect match for the complex calculations needed for deep learning. Think of deep learning as training a computer brain on mountains of data, like pictures or text, to recognize patterns and make predictions. Conventional CPUs, which work through only a handful of calculations at a time, couldn’t keep up with the sheer volume of math involved; GPUs, built to run thousands of simple calculations in parallel, could.

Tensor Cores: This was NVIDIA’s game-changer. Introduced with the Volta architecture in 2017, these specialized cores inside NVIDIA’s GPUs perform matrix multiply-accumulate operations, the workhorse math of deep learning, in a single step. Imagine a marathon runner suddenly getting a pair of rocket skates: that’s the kind of performance boost Tensor Cores provide.
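To make the “rocket skates” idea a bit more concrete: a Tensor Core executes a fused matrix multiply-accumulate, D = A × B + C, on a small tile of numbers in one operation, typically taking low-precision inputs (such as FP16) and accumulating the result at higher precision. The sketch below only emulates that math in plain Python on a 4×4 tile (the tile size used by the original Volta Tensor Cores); it is an illustration under these assumptions, not NVIDIA code, and the function names are hypothetical.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def tensor_core_mma(a, b, c):
    """Emulate one fused matrix multiply-accumulate, D = A x B + C.

    A and B are rounded to FP16 to mimic low-precision operands. The products
    are summed in ordinary Python floats (double precision), standing in for
    the higher-precision accumulator used by the real hardware.
    """
    n, k, m = len(a), len(b), len(b[0])
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    return [
        [c[i][j] + sum(a16[i][p] * b16[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]

# Usage: a 4x4 tile. Multiplying by the identity and adding 1 leaves B + 1.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b_tile = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
d_tile = tensor_core_mma(identity, b_tile, ones)
```

On a GPU, thousands of these tile operations run in parallel and together build up the large matrix multiplications inside a neural network.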

The Latest and Greatest: The Rise of Blackwell

Fast forward to today, and NVIDIA is at it again. Its latest GPU architecture, Blackwell, is raising eyebrows (and processing power) across the tech industry. Here’s the exciting part: Blackwell isn’t just a little faster than its predecessor, Hopper. We’re talking about a significant leap in performance, allowing even more complex AI tasks to be tackled.

What Does This Mean for You (and the Future)?

So, what does all this AI chip talk mean for us regular folks? Well, the impact is vast. From the sharper images on your phone to the smarter recommendations on your favorite streaming service, AI is woven into our daily lives. NVIDIA’s powerful AI chips are the engines driving these advancements, pushing the boundaries of what AI can achieve.

The future looks bright for AI, and NVIDIA’s latest chip launch is like pouring high-octane fuel into the engine. Expect even more impressive developments in areas like self-driving cars, medical diagnosis, and who knows, maybe even AI that can finally beat you at chess (though we can’t promise that!).

Advanced Micro Devices (AMD)

AMD is a tech titan known for its processors, and it is making serious strides in the AI chip arena.

From Graphics Cards to AI Accelerators:

AMD, which has been NVIDIA’s main rival in graphics cards since acquiring ATI in 2006, saw the same potential in GPUs for deep learning. It has been steadily building its AI chip presence, challenging NVIDIA’s dominance with innovative solutions.

The Rise of the CDNA Architecture:

AMD’s big move came with the introduction of its CDNA architecture, designed specifically for data-center compute such as AI and high-performance computing. This wasn’t just a minor tweak: it split AMD’s compute accelerators off from its gaming graphics line (RDNA) so they could be optimized for workloads like deep learning. Its latest chip, the MI300X, is a prime example.

The MI300X:

AMD recently unveiled its most powerful AI chip yet, the MI300X. This isn’t just an incremental upgrade: the MI300X boasts significant performance improvements, especially in handling massive datasets. On raw specs, each MI300X offers a whopping 192 GB of HBM3 memory (that’s like having a super brain for your computer) with a peak theoretical memory bandwidth of 5.3 TB/s. AMD’s eight-GPU platform pools 1.5 TB of HBM3 and connects all eight GPUs with a peak aggregate bidirectional Infinity Fabric bandwidth of 896 GB/s, allowing it to tackle even more complex AI projects.
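The per-GPU and platform figures are easy to mix up, so here is a quick back-of-the-envelope check using only the numbers quoted above (decimal units, 1 GB = 10⁹ bytes). The 70-billion-parameter model is a hypothetical example, and the FP16 estimate counts model weights only (2 bytes per parameter), ignoring activations and other runtime overhead.

```python
# MI300X figures as quoted in the text (decimal units: 1 TB = 1000 GB).
HBM_PER_GPU_GB = 192
GPUS_PER_PLATFORM = 8
PEAK_BANDWIDTH_TB_S = 5.3  # peak theoretical, per GPU

def platform_memory_tb(gpus=GPUS_PER_PLATFORM, per_gpu_gb=HBM_PER_GPU_GB):
    """Total HBM pooled across the platform, in TB."""
    return gpus * per_gpu_gb / 1000

def fp16_weights_gb(params_billion):
    """Memory for model weights alone in FP16 (2 bytes per parameter), in GB."""
    return params_billion * 2

def seconds_to_read_all_memory(per_gpu_gb=HBM_PER_GPU_GB, bw_tb_s=PEAK_BANDWIDTH_TB_S):
    """Time to stream one GPU's entire HBM once at peak bandwidth."""
    return per_gpu_gb / (bw_tb_s * 1000)

total_tb = platform_memory_tb()          # 8 x 192 GB = 1536 GB, i.e. about 1.5 TB
weights_gb = fp16_weights_gb(70)         # hypothetical 70B-parameter model
fits_on_one_gpu = weights_gb <= HBM_PER_GPU_GB
sweep_s = seconds_to_read_all_memory()   # roughly 36 ms at peak
```

In other words, the 1.5 TB figure is the eight-GPU total, while 192 GB is what a single MI300X holds, which is enough for the weights of a model of that hypothetical size without splitting it across GPUs.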

Intel

Intel, a household name in processors, saw the potential of AI chips early on. The challenge? Their core business revolved around CPUs, which weren’t quite cut out for the heavy lifting needed for deep learning. But Intel’s a resourceful bunch, and they started adapting their technology for the AI arena.

The Gaudi Family: Intel’s AI Legacy:

Intel’s Gaudi chips come from Habana Labs, an AI chip startup Intel acquired in 2019. While these haven’t quite reached the performance heights of NVIDIA’s Tensor Core GPUs or AMD’s CDNA-based accelerators, they’ve offered a solid alternative for specific AI tasks. Think of them as the “workhorses” of the AI chip world: reliable and efficient, but maybe not the flashiest on the racetrack.

The Gaudi 3: A Big Leap Forward?

Recently, Intel unveiled the Gaudi 3, their most powerful AI chip yet. Here’s the deal: The Gaudi 3 boasts significant improvements over its predecessors, especially in terms of raw processing power. Intel claims it can even rival NVIDIA’s H100 chip in specific tasks.

But here’s the catch – “specific tasks.” While the Gaudi 3 is a big leap forward for Intel, it seems they’re still playing catch-up to the overall performance and versatility of NVIDIA and AMD’s offerings.

IBM

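While NVIDIA and AMD dominate the headlines in AI chips, IBM has carved a unique path with brain-inspired designs that bring memory and computation close together, including research into analog in-memory computing. This approach stands in stark contrast to the conventional architecture of other AI chips, which constantly shuttle data between separate processors and memory.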

IBM TrueNorth Chip

IBM wasn’t afraid to chart a different course. In 2014, it introduced the TrueNorth chip, a pioneering effort in neuromorphic computing. TrueNorth mimicked the brain’s structure with 4,096 interconnected neurosynaptic cores, together simulating one million neurons and 256 million synapses, and was designed for tasks like image recognition and pattern matching. While innovative, TrueNorth’s performance lagged behind that of GPU-based AI chips.

IBM NorthPole Chip

Fast forward to 2023, and IBM has unveiled its latest innovation: the NorthPole chip, which boasts significant advancements in energy usage, space requirements, and processing speed. IBM’s Dr. Dharmendra Modha, who led its development, highlights NorthPole’s breakthrough architecture, which intertwines memory and compute on the chip and delivers a dramatic boost in efficiency compared to conventional chips.

NorthPole Outshines Traditional Options

Tested on the industry-standard ResNet-50 image-recognition model, NorthPole significantly outperforms common GPUs built on a comparable 12-nanometer process, as well as 14-nanometer CPUs; NorthPole itself is also built on 12-nanometer technology. The result is a remarkable 25x improvement in energy efficiency: NorthPole interprets many more frames per joule of energy consumed. NorthPole also achieves lower latency (faster processing) and is more space-efficient, as measured by frames interpreted per second per billion transistors.
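Both efficiency metrics are simple ratios. Because one watt is one joule per second, dividing frames per second by watts gives frames per joule, and a “25x” claim means one chip’s frames per joule is 25 times the other’s. The sketch below just spells out those definitions; the class and function names are hypothetical, and the numbers in the usage line are made up for illustration, not IBM’s measurements.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    """One hypothetical benchmark run (illustrative fields, not IBM's data)."""
    frames_per_second: float
    watts: float                 # average power draw, in joules per second
    transistors_billion: float

    @property
    def frames_per_joule(self) -> float:
        # (frames / s) / (J / s) = frames per joule
        return self.frames_per_second / self.watts

    @property
    def fps_per_billion_transistors(self) -> float:
        # Space efficiency: throughput normalized by chip size in transistors
        return self.frames_per_second / self.transistors_billion

def improvement(new: float, baseline: float) -> float:
    """How many times better `new` is than `baseline` on a higher-is-better metric."""
    return new / baseline

# Usage with made-up numbers: 1000 frames/s at 50 W is 20 frames per joule.
example = Measurement(frames_per_second=1000, watts=50, transistors_billion=20)
```

Normalizing by transistor count is what lets chips of very different sizes be compared on “space” efficiency.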

Cerebras Systems

Cerebras Systems isn’t your typical AI chip manufacturer. Founded in 2015, this innovative company has carved its niche by creating the world’s largest computer chips, specifically designed for tackling massive AI workloads.

Cerebras was born from the minds of five tech veterans: Andrew Feldman, Gary Lauterbach, Michael James, Sean Lie, and Jean-Philippe Fricker. The five had previously worked together at SeaMicro, a maker of energy-efficient servers that AMD acquired in 2012, where they built up deep expertise in hardware architecture.

The Wafer-Scale Engine 3:

The Wafer-Scale Engine 3 (WSE-3), which powers the Cerebras CS-3 system, is the largest chip ever built. According to Cerebras, the WSE-3 is 57 times larger than the largest GPU, with 52 times more compute cores and 880 times more high-performance on-chip memory. The only wafer-scale processor ever produced, it contains 4 trillion transistors, 900,000 AI-optimized cores, and 44 gigabytes of high-performance on-wafer memory, all dedicated to accelerating AI work.

Each core on the WSE-3 is independently programmable and optimized for the tensor-based, sparse linear algebra operations that underpin neural network training and inference for deep learning. The WSE-3 empowers teams to train and run AI models at unprecedented speed and scale, without the complex distributed programming techniques required to use a GPU cluster.
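Why does “sparse” matter? Many neural network weights and activations are zero, and any multiplication by zero can be skipped without changing the result. The sketch below shows that idea in software using the compressed sparse row (CSR) format, a standard sparse-matrix technique, and counts how many multiplications are actually performed. It is an illustration only: it is not how the WSE-3 is programmed, and Cerebras’ cores exploit sparsity in hardware, which this code does not model. The function names are hypothetical.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) to CSR: (values, col_indices, row_ptr)."""
    values, cols, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:               # store only the non-zero entries
                values.append(v)
                cols.append(j)
        row_ptr.append(len(values))  # where the next row's entries begin
    return values, cols, row_ptr

def csr_matvec(values, cols, row_ptr, x):
    """Compute y = A @ x touching only non-zeros; also return the multiply count."""
    y, multiplies = [], 0
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[cols[k]]
            multiplies += 1
        y.append(acc)
    return y, multiplies

# Usage: a 4x4 weight matrix with only 3 non-zeros out of 16 entries.
weights = [[0, 2, 0, 0],
           [0, 0, 0, 3],
           [1, 0, 0, 0],
           [0, 0, 0, 0]]
y, multiplies = csr_matvec(*to_csr(weights), [1, 2, 3, 4])
```

Here the product needs 3 multiplications instead of the 16 a dense computation would perform; hardware that skips zeros natively can turn that kind of saving into real speedups.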

Cerebras’ Impact:

By creating these supersized AI chips, Cerebras aims to accelerate advancements in fields like drug discovery, materials science, and artificial intelligence research. Their unique approach challenges the traditional mold of AI chip design and paves the way for tackling increasingly complex problems in the future.

Conclusion

More companies are bringing their own AI chips to market, and the competition is far from over. We look forward to seeing what the future holds.